This guide describes how to use esxcli to attach NVMe/TCP storage to a vSphere host.

The guide is structured to get you up and running first with a single path to storage, later adding a second path to storage. Examples throughout show how we configured it in our lab, with a dual-port Mellanox ConnectX-5 NIC for the storage network. The major sections are as follows:

- About NVMe briefly recounts the history of NVMe development and motivating factors for its use.

- Networking describes how to configure NVMe/TCP networking for vSphere by enabling it on the physical NIC and the VMkernel NIC.

- Connecting shows how to connect to an NVMe/TCP subsystem from vSphere both with discovery and by making a manual connection.

- Multipath covers adding an additional path to storage using the High-Performance Plugin (HPP).

- Quickstart is a quick reference for creating a single path to NVMe/TCP storage. If you’re familiar with NVMe/TCP generally and well-versed in VMware networking, consider skipping ahead to this section.

- esxcli Storage Commands reviews the commands used in this guide and includes several other useful commands for dealing with NVMe-oF and NVMe/TCP storage in vSphere.

- References links to several external sources for more information about NVMe/TCP and VMware.

VMware’s support for NVMe over TCP (NVMe/TCP) arrived in vSphere 7 Update 3. You’ll need ESXi 7.0 Update 3c or newer to use it.

ABOUT NVMe

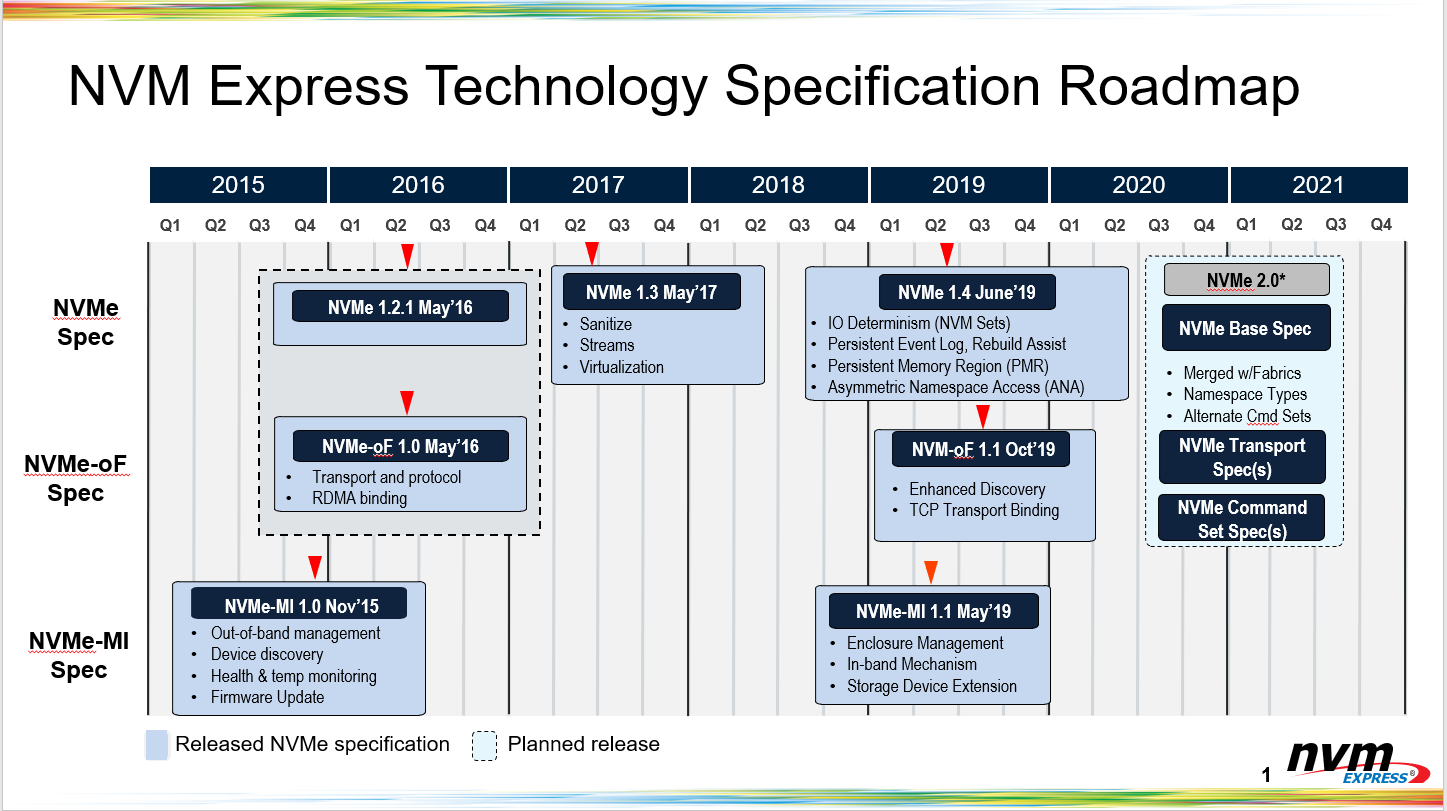

NVM Express (NVMe) is an interface specification for accessing non-volatile storage media, originally developed for media connected to a PCI Express bus. In 2017, the first NVMe over fabrics standard described how to use the NVMe protocol over Fibre Channel. The standard for NVMe over TCP/IP was ratified in late 2018 with minor updates in 2021 and 2022.

The NVMe-over-fabrics protocol is substantially simpler than SCSI and iSCSI. It leaves out much of SCSI’s task management and ordering requirements, not to mention 40 years of accumulated implementation baggage. It entirely omits iSCSI’s complex error recovery techniques that are rarely used in practice. The rise in NVMe and NVMe over fabrics has driven vendors to re-imagine their storage stacks, taking advantage of ubiquitously available high-core-count CPUs to extract maximum performance from non-volatile media.

Today, NVMe/TCP is a compelling alternative to iSCSI/TCP and iSER. It brings a high-performance storage fabric to standard Ethernet gear without the complexities of deploying RDMA.

NETWORKING

Below, we assume that you have a functioning VMware networking stack. At a minimum, you will need a physical network interface card, a vSwitch with the physical NIC as its uplink, and a port group with a VMkernel NIC. This section walks you through configuring these components for NVMe/TCP to get a single path up.

Start configuring networking by identifying the appropriate physical NICs and VMkernel NICs to use for NVMe/TCP traffic. Use esxcli network nic list to show the physical network interface cards. Below, you can see our system has two gigabit Ethernet ports for management and two 100GbE ports that we’ll use for the storage data network. The high-speed links are set up for jumbo frames, but that’s not a requirement for NVMe/TCP.

esxcli network nic list

Name PCI Device Driver Admin Status Link Status Speed Duplex MAC Address MTU Description

------ ------------ ---------- ------------ ----------- ------ ------ ----------------- ---- -------------------------------------------------------

vmnic0 0000:c1:00.0 ntg3 Up Up 1000 Full f4:02:70:f8:cd:8c 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic1 0000:c1:00.1 ntg3 Up Up 1000 Full f4:02:70:f8:cd:8d 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic2 0000:8f:00.0 nmlx5_core Up Up 100000 Full ec:0d:9a:83:27:ba 9000 Mellanox Technologies MT27800 Family [ConnectX-5]

vmnic3 0000:8f:00.1 nmlx5_core Up Up 100000 Full ec:0d:9a:83:27:bb 9000 Mellanox Technologies MT27800 Family [ConnectX-5]
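On a host with many ports, it can help to filter this listing rather than read it by eye. The following is a minimal shell sketch, not part of the original procedure. The helper name fast_nics is hypothetical, and it assumes the column order shown above, with link status in the fifth column and speed in Mbps in the sixth.

```shell
# fast_nics MIN_MBPS
# Reads `esxcli network nic list` output on stdin and prints the names of
# physical NICs whose link is up and whose speed is at least MIN_MBPS.
# Assumes the column layout shown in this guide (hypothetical helper).
fast_nics() {
    awk -v min="$1" '
        $1 ~ /^vmnic/ && $5 == "Up" && $6 + 0 >= min + 0 { print $1 }
    '
}

# Example on a host (not executed here):
#   esxcli network nic list | fast_nics 10000
```

With the listing above and a minimum of 10000, this would print vmnic2 and vmnic3, the two 100GbE ports.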

We’ll start with vmnic2. You should have a standard vSwitch configured with it as its uplink. Show the configured vSwitches with esxcli network vswitch standard list.

esxcli network vswitch standard list

vSwitch0

Name: vSwitch0

Class: cswitch

Num Ports: 11776

Used Ports: 7

Configured Ports: 128

MTU: 1500

CDP Status: listen

Beacon Enabled: false

Beacon Interval: 1

Beacon Threshold: 3

Beacon Required By:

Uplinks: vmnic0

Portgroups: 100-net, Management Network

200-net

Name: 200-net

Class: cswitch

Num Ports: 11776

Used Ports: 7

Configured Ports: 1024

MTU: 9000

CDP Status: listen

Beacon Enabled: false

Beacon Interval: 1

Beacon Threshold: 3

Beacon Required By:

Uplinks: vmnic2 <--- uplink set to the physical NIC

Portgroups: 200-vm, 200-port <--- one portgroup to be used for NVMe/TCP

...

In our lab, the 200-net vSwitch is part of the storage network. The 200-port portgroup on this switch will be used for NVMe/TCP traffic.

Finally, use esxcli network ip interface list to show the VMkernel NICs. Below, vmk1 is the one we want.

esxcli network ip interface list

vmk0

Name: vmk0

MAC Address: f4:02:70:f8:cd:8c

Enabled: true

Portset: vSwitch0

Portgroup: Management Network

Netstack Instance: defaultTcpipStack

VDS Name: N/A

VDS UUID: N/A

VDS Port: N/A

VDS Connection: -1

Opaque Network ID: N/A

Opaque Network Type: N/A

External ID: N/A

MTU: 9000

TSO MSS: 65535

RXDispQueue Size: 1

Port ID: 33554436

vmk1

Name: vmk1

MAC Address: 00:50:56:60:ce:b8

Enabled: true

Portset: 200-net

Portgroup: 200-port <--- portgroup on vSwitch above

Netstack Instance: defaultTcpipStack

VDS Name: N/A

VDS UUID: N/A

VDS Port: N/A

VDS Connection: -1

Opaque Network ID: N/A

Opaque Network Type: N/A

External ID: N/A

MTU: 9000

TSO MSS: 65535

RXDispQueue Size: 1

Port ID: 50331652
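To find the VMkernel NIC attached to a given portgroup without scanning the whole listing, the output can be filtered. This is a hedged sketch only: vmk_for_portgroup is a hypothetical helper, and it assumes the "Name:" and "Portgroup:" field labels appear exactly as in the listing above.

```shell
# vmk_for_portgroup PORTGROUP
# Reads `esxcli network ip interface list` output on stdin and prints the
# name of each VMkernel NIC whose Portgroup field equals PORTGROUP.
# Hypothetical helper; field labels are taken from the listing in this guide.
vmk_for_portgroup() {
    awk -v pg="$1" '
        $1 == "Name:" { name = $2 }            # remember the current vmk
        $1 == "Portgroup:" {
            sub(/^[ \t]*Portgroup:[ \t]*/, "") # keep only the value
            sub(/[ \t]+$/, "")                 # drop trailing whitespace
            if ($0 == pg) print name
        }
    '
}

# Example on a host (not executed here):
#   esxcli network ip interface list | vmk_for_portgroup 200-port
```

Matching on the whole value rather than a single field keeps portgroup names that contain spaces, such as Management Network, working.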

Enable NVMe/TCP on the Physical NIC

Next, enable NVMe/TCP on the physical NIC that participates in the storage network: vmnic2. This step creates the vmhba storage adapter (Virtual Machine Host Bus Adapter), which you will use in all subsequent interactions with esxcli.

esxcli nvme fabrics enable --protocol TCP --device vmnic2

See that vSphere created the storage adapter:

esxcli nvme adapter list

Adapter Adapter Qualified Name Transport Type Driver Associated Devices

------- ------------------------------- -------------- ------- ------------------

vmhba67 aqn:nvmetcp-ec-0d-9a-83-27-ba-T TCP nvmetcp vmnic2

Later, when we configure multipathing, we’ll enable NVMe/TCP for the other physical NIC, creating a second NVMe-oF adapter. Note the contrast with Software iSCSI, which uses a single adapter for the entire host.

Enable NVMe/TCP on the VMkernel NIC

Finally, tag the VMkernel NIC for NVMe/TCP. This signals to the adapter on the underlying physical NIC that the IP address of the VMkernel NIC will be used for NVMe/TCP storage traffic.

esxcli network ip interface tag add --interface-name vmk1 --tagname NVMeTCP
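The two enablement steps always travel together, one per physical NIC and VMkernel NIC pair. As a small sketch, the hypothetical helper below prints the two commands for a given pair instead of running them, so you can review them before pasting them into a host shell. It uses only the esxcli invocations already shown in this section.

```shell
# nvmetcp_single_path_cmds PHYSICAL_NIC VMKERNEL_NIC
# Prints (does not execute) the two commands that enable NVMe/TCP on a
# physical NIC and tag the matching VMkernel NIC. Hypothetical helper.
nvmetcp_single_path_cmds() {
    nic=$1
    vmk=$2
    printf 'esxcli nvme fabrics enable --protocol TCP --device %s\n' "$nic"
    printf 'esxcli network ip interface tag add --interface-name %s --tagname NVMeTCP\n' "$vmk"
}

# Example: review the commands for the first path
#   nvmetcp_single_path_cmds vmnic2 vmk1
```

Printing rather than executing is a deliberate choice here: both commands change host configuration, so a dry run is the safer default.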

CONNECTING

With the networking configuration in place, vSphere can now make an NVMe/TCP connection to the backing storage.

Discovery

Use discovery to show the list of NVMe subsystems available at the specified IP address. These subsystems have NVMe Qualified Names (NQNs), similar to iSCSI Qualified Names (IQNs). VMware doesn’t yet support authentication for NVMe-over-fabrics, so all you need is one IP address from your NVMe/TCP storage server. Here’s the result for a Blockbridge all-flash system at 172.16.200.120:

esxcli nvme fabrics discover --adapter vmhba67 --ip-address 172.16.200.120

Transport Type Address Family Subsystem Type Controller ID Admin Queue Max Size Transport Address Transport Service ID Subsystem NQN Connected

-------------- -------------- -------------- ------------- -------------------- ----------------- -------------------- ---------------------------------------------------------------- ---------

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19 false

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:9666a054-84bc-4937-8320-7b514464033f false

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:6ab51064-7e35-42b3-868e-e44f60097f2a false

To view the host NQN, run esxcli nvme info get. To permit access, add the host NQN to your NVMe/TCP storage system’s ACL. For Blockbridge, this command is bb profile initiator add --name <nqn> --profile <profile>.

For most NVMe/TCP systems, the controller ID shown in discovery is 65535. This is how the NVMe subsystem indicates that it’s using the “dynamic controller model”. It allocates a controller on demand for each new connection to the admin queue.

To connect to all the subsystems shown above, simply add --connect-all to the discovery command line.

esxcli nvme fabrics discover --adapter vmhba67 --ip-address 172.16.200.120 --connect-all

Transport Type Address Family Subsystem Type Controller ID Admin Queue Max Size Transport Address Transport Service ID Subsystem NQN Connected

-------------- -------------- -------------- ------------- -------------------- ----------------- -------------------- ---------------------------------------------------------------- ---------

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19 true

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:9666a054-84bc-4937-8320-7b514464033f true

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:6ab51064-7e35-42b3-868e-e44f60097f2a true

You can see the connected NVMe controllers here:

esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online

-------------------------------------------------------------------------------------------- ----------------- ------- -------------- ---------

nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19#vmhba67#172.16.200.120:4420 15684 vmhba67 TCP true

nqn.2009-12.com.blockbridge:9666a054-84bc-4937-8320-7b514464033f#vmhba67#172.16.200.120:4420 8972 vmhba67 TCP true

nqn.2009-12.com.blockbridge:6ab51064-7e35-42b3-868e-e44f60097f2a#vmhba67#172.16.200.120:4420 5883 vmhba67 TCP true

Connect

Sometimes you won’t want vSphere to connect to every subsystem available. To make a manual connection to a single subsystem, supply the subsystem’s NQN on the connect command line:

esxcli nvme fabrics connect --adapter vmhba67 --ip-address 172.16.200.120 --subsystem-nqn nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19
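When only some of the discovered subsystems should be connected, the discovery output can be turned into one connect command per subsystem. The sketch below is illustrative: connect_cmds is a hypothetical helper, and it assumes the discovery column layout shown earlier, where the last three columns are the transport service ID, the subsystem NQN, and the connected flag.

```shell
# connect_cmds ADAPTER
# Reads `esxcli nvme fabrics discover` output on stdin and prints (does not
# execute) a connect command for every TCP subsystem not yet connected.
# Hypothetical helper; column positions follow the listing in this guide.
connect_cmds() {
    awk -v adapter="$1" '
        $1 == "TCP" && $NF == "false" {
            # $(NF-3) is the transport address, $(NF-1) the subsystem NQN
            printf "esxcli nvme fabrics connect --adapter %s --ip-address %s --subsystem-nqn %s\n",
                   adapter, $(NF-3), $(NF-1)
        }
    '
}

# Example on a host (not executed here):
#   esxcli nvme fabrics discover --adapter vmhba67 --ip-address 172.16.200.120 \
#       | connect_cmds vmhba67 | grep 053519e1
```

Filtering the generated lines with grep, as in the example, is one way to pick a single subsystem before running the command.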

Additional Connect-Time Options

The following options apply to both esxcli nvme fabrics connect and esxcli nvme fabrics discover:

- Header and data digests (--digest): When supported by the backing storage, you can enable header and/or data digests to guard against transport-level corruption. Valid values are 0 (disable digests), 1 (header digests), 2 (data digests), and 3 (header and data digests).

- Number of I/O Queues (--io-queue-number): By default, VMware makes one I/O queue connection per CPU core, topping out at 8 I/O queues on an 8+ CPU core system. You can request more queues, but the count will not exceed the number of CPU cores. You may wish to limit this further on high core count systems.

- I/O Queue Size (--io-queue-size): NVMe devices generally support many more outstanding commands than iSCSI or Fibre Channel. By default, VMware requests a queue size of 256. Note that this setting applies to each I/O queue independently.
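The digest values combine like bit flags (1 for header, 2 for data, 3 for both), and the default queue count follows a simple rule. As a minimal sketch of that arithmetic, using hypothetical helper names and only the numbers stated in the list above:

```shell
# digest_value [header] [data]
# Prints the --digest value for the requested digest kinds.
# Hypothetical helper based on the 0/1/2/3 values listed in this guide.
digest_value() {
    value=0
    for kind in "$@"; do
        case $kind in
            header) value=$((value | 1)) ;;
            data)   value=$((value | 2)) ;;
            *) echo "unknown digest kind: $kind" >&2; return 1 ;;
        esac
    done
    echo "$value"
}

# default_io_queues CPU_CORES
# Prints the default I/O queue count described above: one per core, capped at 8.
default_io_queues() {
    cores=$1
    if [ "$cores" -lt 8 ]; then
        echo "$cores"
    else
        echo 8
    fi
}
```

For example, digest_value header data yields 3, and default_io_queues 32 yields 8, so a 32-core host would need an explicit --io-queue-number to use more than 8 queues.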

MULTIPATH

The best summary of the way vSphere thinks about multipathing is found by listing the devices in the storage plugin. For NVMe, this is the HPP plugin, and the command is esxcli storage hpp device list:

esxcli storage hpp device list

eui.1962194c4062640f0a010a009fbd331e

Device Display Name: NVMe TCP Disk (eui.1962194c4062640f0a010a009fbd331e)

Path Selection Scheme: LB-RR

Path Selection Scheme Config: {iops=1000,bytes=10485760;}

Current Path: vmhba67:C0:T0:L0

Working Path Set: vmhba67:C0:T0:L0

Is SSD: true

Is Local: false

Paths: vmhba67:C0:T0:L0

Use ANO: false

In the example above, there’s one path to the connected storage device, via vmhba67:C0:T0:L0. By default, it’s configured with round-robin load balancing (LB-RR) and switches paths every 1,000 I/Os or 10 MiB of data, whichever comes first.

Path Selection Policy Tuning

We recommend lowering both the IOPS and bytes numbers for the round-robin path policy. Selecting the next path after 32 I/Os or 512 KiB will allow vSphere to more effectively use two or more active paths.

esxcli storage hpp device set --device eui.1962194c4062640f0a010a009fbd331e --pss LB-RR --iops 32 --bytes 524288
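The --bytes option takes a raw byte count, so 512 KiB becomes 524288 and the default 10 MiB is 10485760. The sketch below does that conversion and prints the resulting command for review. The helper name hpp_rr_cmd is hypothetical, and the command it prints is the same one shown above.

```shell
# hpp_rr_cmd DEVICE IOPS KIB
# Prints (does not execute) the round-robin tuning command for DEVICE,
# converting the switch threshold from KiB to bytes. Hypothetical helper.
hpp_rr_cmd() {
    device=$1
    iops=$2
    kib=$3
    bytes=$((kib * 1024))
    printf 'esxcli storage hpp device set --device %s --pss LB-RR --iops %s --bytes %s\n' \
        "$device" "$iops" "$bytes"
}

# Example: the recommended 32 I/Os or 512 KiB
#   hpp_rr_cmd eui.1962194c4062640f0a010a009fbd331e 32 512
```

The setting is per device, so on a host with several NVMe/TCP devices the helper would be called once for each device identifier.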

Adding a Second Path

To add a second path, follow the workflow described in the earlier Networking section. Then, connect to the endpoint on the other subnet from the second port’s adapter.

- Enable NVMe/TCP on the other NIC port:

esxcli nvme fabrics enable --protocol TCP --device vmnic3

- List the storage adapters to verify that a second NVMe vmhba exists:

esxcli nvme adapter list

Adapter Adapter Qualified Name Transport Type Driver Associated Devices

------- ------------------------------- -------------- ------- ------------------

vmhba67 aqn:nvmetcp-ec-0d-9a-83-27-ba-T TCP nvmetcp vmnic2

vmhba68 aqn:nvmetcp-ec-0d-9a-83-27-bb-T TCP nvmetcp vmnic3

- Enable NVMe/TCP on the VMkernel NIC for the second NIC port:

esxcli network ip interface tag add --interface-name vmk2 --tagname NVMeTCP

- Connect to the other portal on backend NVMe/TCP storage:

esxcli nvme fabrics connect --adapter vmhba68 --ip-address 172.16.201.120 --subsystem-nqn nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19

- List the NVMe controllers. There should be two of them now:

esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online

--------------------------------------------------------------------- ----------------- ------- -------------- ---------

nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19#vmhba67#172.16.200.45:4420 428 vmhba67 TCP true

nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19#vmhba68#172.16.201.45:4420 429 vmhba68 TCP true

Finally, esxcli storage hpp device list shows two paths, one accessible to each adapter.

esxcli storage hpp device list

eui.1962194c4062640f0a010a009fbd331e

Device Display Name: NVMe TCP Disk (eui.1962194c4062640f0a010a009fbd331e)

Path Selection Scheme: LB-RR

Path Selection Scheme Config: {iops=32,bytes=524288;}

Current Path: vmhba68:C0:T0:L0

Working Path Set: vmhba68:C0:T0:L0, vmhba67:C0:T0:L0

Is SSD: true

Is Local: false

Paths: vmhba68:C0:T0:L0, vmhba67:C0:T0:L0

Use ANO: false
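On hosts with many devices, a quick way to confirm that every device has gained its second path is to count the entries on each Paths line. This is a hedged sketch: hpp_path_counts is a hypothetical helper, and it assumes the layout shown above, where each device starts with a line holding only its identifier and the attribute lines each contain a colon.

```shell
# hpp_path_counts
# Reads `esxcli storage hpp device list` output on stdin and prints
# "<device> <number of paths>" for each device. Hypothetical helper.
hpp_path_counts() {
    awk '
        NF == 1 && $0 !~ /:/ { dev = $1 }   # device identifier line
        $1 == "Paths:" {
            sub(/^[ \t]*Paths:[ \t]*/, "")  # keep only the path list
            n = split($0, paths, ",")       # paths are comma-separated
            print dev, n
        }
    '
}

# Example on a host (not executed here):
#   esxcli storage hpp device list | hpp_path_counts
```

For the listing above, this would report the device eui.1962194c4062640f0a010a009fbd331e with 2 paths.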

QUICKSTART

Looking to get up and running quickly? This is a quick reference for configuring a single path to NVMe/TCP storage with VMware. For the detailed explanation, see the Networking section.

- Select the physical NICs and portgroups.

- Enable support for NVMe/TCP.

- Connect to your backing storage with a single path.

On completion of this workflow, visit the Multipath section to configure additional paths.

Select Physical NICs and Portgroups

- Identify the physical NIC you will use for NVMe/TCP storage.

esxcli network nic list

Name PCI Device Driver Admin Status Link Status Speed Duplex MAC Address MTU Description

------ ------------ ---------- ------------ ----------- ------ ------ ----------------- ---- -------------------------------------------------------

vmnic2 0000:8f:00.0 nmlx5_core Up Up 100000 Full ec:0d:9a:83:27:ba 9000 Mellanox Technologies MT27800 Family [ConnectX-5]

- Identify the standard vSwitch with an uplink set to the physical NIC. This vSwitch connects your portgroup to the physical NIC.

esxcli network vswitch standard list

200-net

Name: 200-net

Class: cswitch

Num Ports: 11776

Used Ports: 7

Configured Ports: 1024

MTU: 9000

CDP Status: listen

Beacon Enabled: false

Beacon Interval: 1

Beacon Threshold: 3

Beacon Required By:

Uplinks: vmnic2 <--- uplink set to the physical NIC

Portgroups: 200-vm, 200-port <--- one portgroup to be used for NVMe/TCP

- Identify the VMkernel NIC that you will use. Its portgroup name must be on the list of portgroups in the vSwitch above.

esxcli network ip interface list

vmk1

Name: vmk1

MAC Address: 00:50:56:60:ce:b8

Enabled: true

Portset: 200-net

Portgroup: 200-port <--- portgroup on vSwitch above

Netstack Instance: defaultTcpipStack

VDS Name: N/A

VDS UUID: N/A

VDS Port: N/A

VDS Connection: -1

Opaque Network ID: N/A

Opaque Network Type: N/A

External ID: N/A

MTU: 9000

TSO MSS: 65535

RXDispQueue Size: 1

Port ID: 50331652

Enable Support for NVMe/TCP

- Create the NVMe/TCP “vmhba” adapter for your physical NIC:

esxcli nvme fabrics enable --protocol TCP --device vmnic2

- View the created vmhba:

esxcli nvme adapter list

Adapter Adapter Qualified Name Transport Type Driver Associated Devices

------- ------------------------------- -------------- ------- ------------------

vmhba67 aqn:nvmetcp-ec-0d-9a-83-27-ba-T TCP nvmetcp vmnic2

- Set the VMkernel NIC to permit NVMe/TCP services:

esxcli network ip interface tag add --interface-name vmk1 --tagname NVMeTCP

Connect to NVMe/TCP Storage

- Connect to the NVMe/TCP portal on your backend NVMe/TCP storage:

esxcli nvme fabrics connect --adapter vmhba67 --ip-address 172.16.200.120 --subsystem-nqn nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19

- Show the NVMe controller:

esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online

--------------------------------------------------------------------- ----------------- ------- -------------- ---------

nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19#vmhba67#172.16.200.45:4420 428 vmhba67 TCP true

- Show the NVMe namespaces available to the controller:

esxcli nvme namespace list

Name Controller Number Namespace ID Block Size Capacity in MB

------------------------------------ ----------------- ------------ ---------- --------------

eui.1962194c4062640f0a010a009fbd331e 428 1 512 40000

ESXCLI STORAGE COMMANDS

This section has a review of the commands used above, plus a few extra useful commands.

esxcli network ip interface tag add --interface-name <vmkernel nic> --tagname NVMeTCP

esxcli nvme adapter list

esxcli nvme controller identify --controller <controller name>

esxcli nvme controller list

esxcli nvme fabrics connect --adapter <adapter> --ip-address <ip> --subsystem-nqn <nqn>

esxcli nvme fabrics disconnect --adapter <adapter> --controller-number <controller number>

esxcli nvme fabrics discover --adapter <adapter> --ip-address <storage ip>

esxcli nvme fabrics enable --protocol <transport protocol> --device <physical nic>

esxcli nvme info get

esxcli nvme namespace identify --namespace <namespace eui or nguid>

esxcli storage core device vaai status get

esxcli storage core path list

esxcli storage hpp device list

esxcli storage hpp device set --device <device> --pss <path selection scheme> --iops <iops> --bytes <bytes>

esxcli system module parameters list --module <vmknvme|nvmetcp>

How to Enable NVMe/TCP on a Physical NIC

esxcli nvme fabrics enable --protocol TCP --device vmnic2

How to Tag a VMkernel NIC to permit NVMe/TCP Traffic

esxcli network ip interface tag add --interface-name vmk1 --tagname NVMeTCP

How to List the NVMe-oF Adapters

esxcli nvme adapter list

Adapter Adapter Qualified Name Transport Type Driver Associated Devices

------- ------------------------------- -------------- ------- ------------------

vmhba67 aqn:nvmetcp-ec-0d-9a-83-27-ba-T TCP nvmetcp vmnic2

How to Show the Host NQN

esxcli nvme info get

Host NQN: nqn.2014-08.localnet:nvme:localhost

How to List the NVMe Controllers

esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online

--------------------------------------------------------------------- ----------------- ------- -------------- ---------

nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19#vmhba67#172.16.200.45:4420 428 vmhba67 TCP true

How to Discover NVMe/TCP Subsystems

esxcli nvme fabrics discover --adapter vmhba67 --ip-address 172.16.200.120

Transport Type Address Family Subsystem Type Controller ID Admin Queue Max Size Transport Address Transport Service ID Subsystem NQN Connected

-------------- -------------- -------------- ------------- -------------------- ----------------- -------------------- ---------------------------------------------------------------- ---------

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19 false

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:9666a054-84bc-4937-8320-7b514464033f false

TCP IPv4 NVM 65535 32 172.16.200.120 4420 nqn.2009-12.com.blockbridge:6ab51064-7e35-42b3-868e-e44f60097f2a false

How to Tune The Path Selection Policy

With its extremely low latency, NVMe/TCP storage may benefit from lowering both the number of I/Os and the number of bytes sent before switching to another active path.

esxcli storage hpp device set --device eui.1962194c4062640f0a010a009fbd331e --pss LB-RR --iops 32 --bytes 524288

How to Connect to NVMe/TCP Storage

esxcli nvme fabrics connect --adapter vmhba68 --ip-address 172.16.201.120 --subsystem-nqn nqn.2009-12.com.blockbridge:053519e1-fca6-4cb4-bb31-ece4ce9c5c19

How to Disconnect an NVMe-oF Controller

esxcli nvme fabrics disconnect --controller-number 428 --adapter vmhba67

How to List the Connected Paths to Storage

esxcli storage core path list shows the details of each individual path to each storage device.

esxcli storage core path list

tcp.vmnic2:00:0c:29:93:a1:0c-tcp.unknown-eui.21e816ae2707494d98a183e50273c922

UID: tcp.vmnic2:00:0c:29:93:a1:0c-tcp.unknown-eui.21e816ae2707494d98a183e50273c922

Runtime Name: vmhba67:C0:T0:L0

Device: eui.21e816ae2707494d98a183e50273c922

Device Display Name: NVMe TCP Disk (eui.21e816ae2707494d98a183e50273c922)

Adapter: vmhba67

Channel: 0

Target: 0

LUN: 0

Plugin: HPP

State: active

Transport: tcp

Adapter Identifier: tcp.vmnic2:00:0c:29:93:a1:0c

Target Identifier: tcp.unknown

Adapter Transport Details: Unavailable or path is unclaimed

Target Transport Details: Unavailable or path is unclaimed

Maximum IO Size: 32768

How to List the HPP Storage Paths

As an alternative view, esxcli storage hpp device list shows a summary of the paths available to each storage device.

esxcli storage hpp device list

eui.1962194c4062640f0a010a009fbd331e

Device Display Name: NVMe TCP Disk (eui.1962194c4062640f0a010a009fbd331e)

Path Selection Scheme: LB-RR

Path Selection Scheme Config: {iops=32,bytes=524288;}

Current Path: vmhba68:C0:T0:L0

Working Path Set: vmhba68:C0:T0:L0, vmhba67:C0:T0:L0

Is SSD: true

Is Local: false

Paths: vmhba68:C0:T0:L0, vmhba67:C0:T0:L0

Use ANO: false

How to Show the NVMe Identify Controller Data

esxcli nvme controller identify --controller nqn.2009-12.com.blockbridge:t-pjxazxb-mike#vmhba67#172.16.200.45:4420

Name Value Description

--------- ------------------- -----------

VID 0xbbbb PCI Vendor ID

SSVID 0xbbbb PCI Subsystem Vendor ID

SN TGT1D62194C4062647A Serial Number

MN Blockbridge Model Number

FR 5.3.0 Firmware Revision

RAB 0x3 Recommended Arbitration Burst

IEEE 0a010a IEEE OUI Identifier

CMIC 0xb Controller Multi-Path I/O and Namespace Sharing Capabilities

MDTS 0x3 Maximum Data Transfer Size

CNTLID 0x0 Controller ID

VER 2.0.0 Version

RTD3R 0x0 RTD3 Resume Latency

RTD3E 0x0 RTD3 Entry Latency

OAES 0x900 Optional Asynchronous Events Supported

CTRATT 0x80 Controller Attributes

OACS 0x0 Optional Admin Command Support

ACL 0xff Abort Command Limit

AERL 0x3 Asynchronous Event Request Limit

FRMW 0x3 Firmware Updates

LPA 0x26 Log Page Attributes

ELPE 0x0 Error Log Page Entries

NPSS 0x0 Number of Power States Support

AVSCC 0x0 Admin Vendor Specific Command Configuration

APSTA 0x0 Autonomous Power State Transition Attributes

WCTEMP 0x0 Warning Composite Temperature Threshold

CCTEMP 0x0 Critical Composite Temperature Threshold

MTFA 0x0 Maximum Time for Firmware Activation

HMPRE 0x0 Host Memory Buffer Preferred Size

HMMIN 0x0 Host Memory Buffer Minimum Size

TNVMCAP 0x0 Total NVM Capacity

UNVMCAP 0x0 Unallocated NVM Capacity

RPMBS 0x0 Replay Protected Memory Block Support

EDSTT 0x0 Extended Device Self-test Time

DSTO 0x0 Device Self-test Options

FWUG 0x0 Firmware Update Granularity = Value*4KB (0xff -> no restriction)

KAS 0x64 Keep Alive Support

HCTMA 0x0 Host Controlled Thermal Management Attributes

MNTMT 0x0 Minimum Thermal Management Temperature

MXTMT 0x0 Maximum Thermal Management Temperature

SANICAP 0x0 Sanitize Capabilities

ANATT 0xa ANA Transition Time

ANACAP 0x47 Asymmetric Namespace Access Capabilities

ANAGRPMAX 0x20 ANA Group Identifier Maximum

NANAGRPID 0x20 Number of ANA Group Identifiers

SQES 0x66 Submission Queue Entry Size

CQES 0x44 Completion Queue Entry Size

MAXCMD 0x100 Maximum Outstanding Commands

NN 0x100 Number of Namespaces

ONCS 0x1 Optional NVM Command Support

FUSES 0x1 Fused Operation Support

FNA 0x0 Format NVM Attributes

VWC 0x0 Volatile Write Cache

AWUN 0x0 Atomic Write Unit Normal

AWUPF 0x0 Atomic Write Unit Power Fail

NVSCC 0x0 NVM Vendor Specific Command Configuration

ACWU 0x0 Atomic Compare & Write Unit

SGLS 0x200002 SGL Support
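Some of these fields are encoded rather than literal. MDTS, for instance, is a power-of-two exponent in units of the controller's minimum memory page size, with 0 meaning no limit. The sketch below decodes it under the assumption of a 4 KiB minimum page size, which is not shown in this output. The helper name mdts_bytes is hypothetical.

```shell
# mdts_bytes MDTS [MIN_PAGE_BYTES]
# Prints the maximum data transfer size in bytes implied by an MDTS value.
# MIN_PAGE_BYTES defaults to 4096, which is an assumption, not a value
# reported in the identify output. Hypothetical helper.
mdts_bytes() {
    mdts=$(( $1 ))          # accepts decimal or 0x-prefixed hex
    page=${2:-4096}
    if [ "$mdts" -eq 0 ]; then
        echo "unlimited"
    else
        echo $(( page << mdts ))
    fi
}

# Example: the MDTS value reported above
#   mdts_bytes 0x3
```

Under that assumption, MDTS 0x3 works out to 32768 bytes, which is consistent with the Maximum IO Size of 32768 shown in the path listing earlier in this section.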

How to Show the NVMe Identify Namespace Data

esxcli nvme namespace identify --namespace eui.21e816ae2707494d98a183e50273c922

Name Value Description

-------- -------------------------------- -----------

NSZE 0x4000000 Namespace Size

NCAP 0x4000000 Namespace Capacity

NUSE 0x4000000 Namespace Utilization

NSFEAT 0x1a Namespace Features

NLBAF 0x0 Number of LBA Formats

FLBAS 0x0 Formatted LBA Size

MC 0x0 Metadata Capabilities

DPC 0x0 End-to-end Data Protection Capabilities

DPS 0x0 End-to-end Data Protection Type Settings

NMIC 0x1 Namespace Multi-path I/O and Namespace Sharing Capabilities

RESCAP 0x0 Reservation Capabilities

FPI 0x0 Format Progress Indicator

DLFEAT 0x1 Deallocate Logical Block Features

NAWUN 0x0 Namespace Atomic Write Unit Normal

NAWUPF 0x0 Namespace Atomic Write Unit Power Fail

NACWU 0x0 Namespace Atomic Compare & Write Unit

NABSN 0x7 Namespace Atomic Boundary Size Normal

NABO 0x0 Namespace Atomic Boundary Offset

NABSPF 0x7 Namespace Atomic Boundary Size Power Fail

NOIOB 0x100 Namespace Optimal IO Boundary

NVMCAP 0x0 NVM Capacity

ANAGRPID 0x1 ANA Group Identifier

NSATTR 0x0 Namespace Attributes

NGUID 21e816ae2707494d98a183e50273c922 Namespace Globally Unique Identifier

EUI64 0a010a14c4062644 IEEE Extended Unique Identifier

LBAF0 0x90000 LBA Format 0 Support
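The namespace size can be worked out from two of these fields. NSZE counts logical blocks, and bits 23:16 of the active LBA format hold the block size as a power-of-two exponent. The following is a minimal sketch of that arithmetic with a hypothetical helper name, assuming LBAF0 is the format in use, as FLBAS 0x0 suggests.

```shell
# ns_capacity_bytes NSZE LBAF
# Prints the namespace size in bytes: NSZE logical blocks, each
# 2^LBADS bytes, where LBADS is bits 23:16 of the LBA format value.
# Hypothetical helper; accepts decimal or 0x-prefixed hex arguments.
ns_capacity_bytes() {
    nsze=$(( $1 ))
    lbaf=$(( $2 ))
    lbads=$(( (lbaf >> 16) & 0xff ))
    echo $(( nsze << lbads ))
}

# Example: the values reported above
#   ns_capacity_bytes 0x4000000 0x90000
```

For the output above, LBADS is 9 (512-byte blocks) and the result is 34359738368 bytes, or 32 GiB.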

How to Show the VAAI Status of Connected Storage

esxcli storage core device vaai status get

eui.1962194c4062644e0a010a0022c97302

VAAI Plugin Name:

ATS Status: supported

Clone Status: unsupported

Zero Status: supported

Delete Status: unsupported

How to List the Module Parameters for NVMe/TCP

Both the vmknvme and nvmetcp modules have parameters. Use esxcli system module parameters list to retrieve them.

esxcli system module parameters list --module vmknvme

Name Type Value Description

------------------------------------- ---- ----- -----------

vmknvme_adapter_num_cmpl_queues uint Number of PSA completion queues for NVMe-oF adapter, min: 1, max: 16, default: 4

vmknvme_compl_world_type uint completion world type, PSA: 0, VMKNVME: 1

vmknvme_ctlr_recover_initial_attempts uint Number of initial controller recover attempts, MIN: 2, MAX: 30

vmknvme_ctlr_recover_method uint controller recover method after initial recover attempts, RETRY: 0, DELETE: 1

vmknvme_cw_rate uint Number of completion worlds per IO queue (NVMe/PCIe only). Number is a power of 2. Applies when number of queues less than 4.

vmknvme_enable_noiob uint If enabled, driver will split the commands based on NOIOB.

vmknvme_hostnqn_format uint HostNQN format, UUID: 0, HostName: 1

vmknvme_io_queue_num uint vmknvme IO queue number for NVMe/PCIe adapter: pow of 2 in [1, 16]

vmknvme_io_queue_size uint IO queue size: [8, 1024]

vmknvme_iosplit_workaround uint If enabled, qdepth in PSA layer is half size of vmknvme settings.

vmknvme_log_level uint log level: [0, 20]

vmknvme_max_prp_entries_num uint User defined maximum number of PRP entries per controller:default value is 0

vmknvme_total_io_queue_size uint Aggregated IO queue size of a controller, MIN: 64, MAX: 4096

esxcli system module parameters list --module nvmetcp

Name Type Value Description

----------------------- ---- ----- -----------

nvmetcp_collect_latency uint Enable/disable IO latency collection, 0: disabled (default), 1: enabled